SLAM

Simultaneous Localization and Mapping

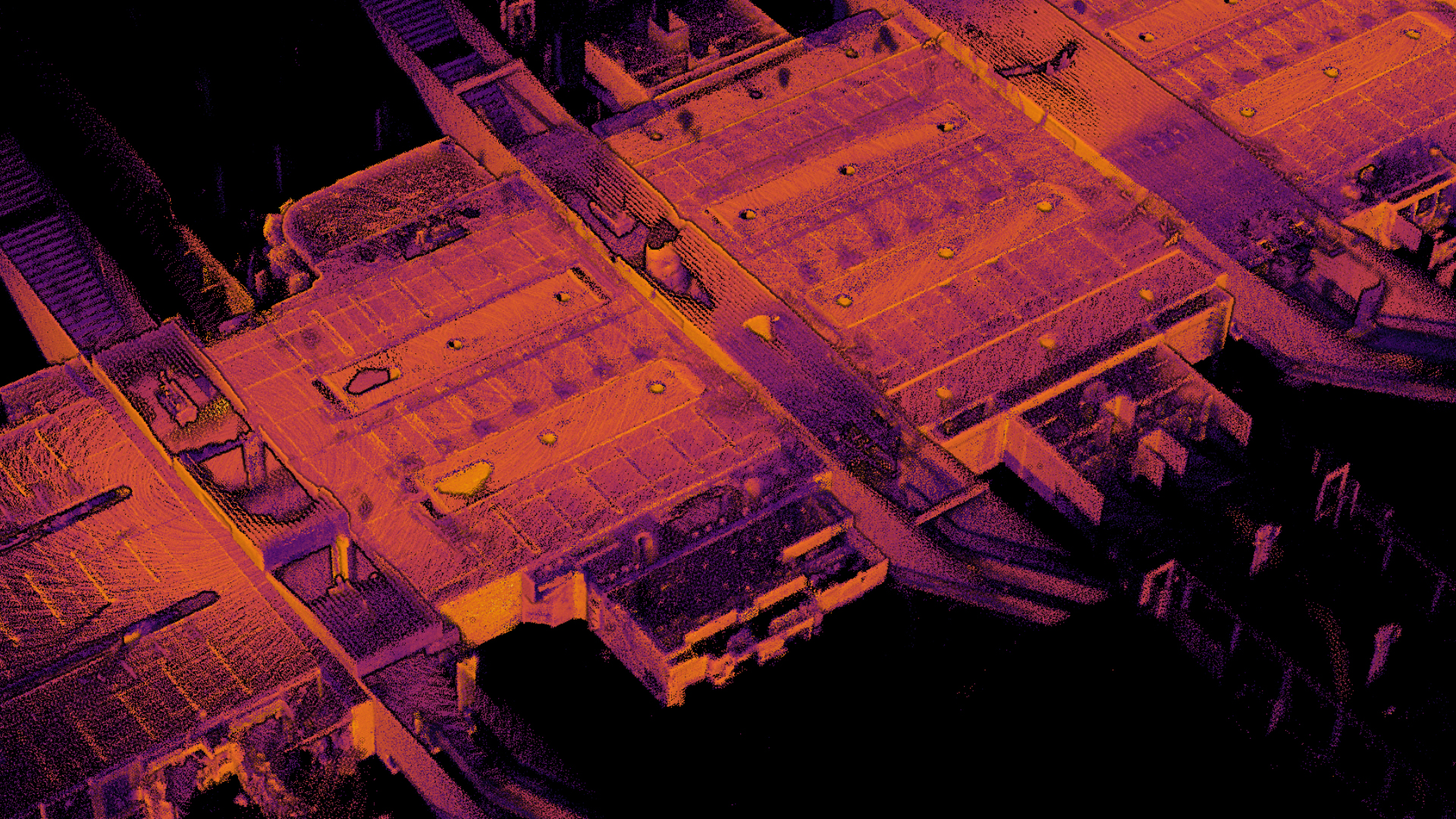

Accurate Scene Reconstruction using LiDAR

LiDAR is a great technique to collect spatial data. Where a photo would capture in detail what colour a point in a scene has, the LiDAR image would capture the exact location of the point. This makes LiDAR data useful for when we need to measure distances in the real world.

A drawback of LiDAR, compared to photographs, is its limited resolution. A consumer grade photo camera would have about 30 times the information density than a state of the art LiDAR sensor.

While the LiDAR images have great precision, they do not have a lot of detail. So it can give you the exact distance between two points, but if and only if you have actually observed both points simultaneously.

A single frame does not provide us with enough data. We must therefore collect more frames. Between those frames we should move around, otherwise we would be scanning the same point over and over again. Now we have a better coverage of the scene.

While we scanned many more points we didn't gain much in terms of detail. We have very good measurements of where all the scanned points are located with respect to the observer (i.e. the sensor). But we have no information where the sensor is at any given time relative to itself a moment ago.

If we know the path our sensor had traveled we can figure out where our measurements would fit in the world. If we know where our measurements would fit in the world we can figure out the path our sensor has traveled. But we know neither. This catch 22 is the heart of SLAM: Simultaneous Localisation and Mapping. We need to solve those 2 problems at once.

Simultaneous Localisation and Mapping

With SLAM we try to reconstruct the motion and pose of our sensor using the data we measured. We can add GPS and an additional Inertia Measurement Unit, or IMU, to provide us with a hint of our location, heading and velocity. But ultimately for a good result these technologies are nowhere near accurate enough, lack precision and experience drift. So for the finer registration we need to look at the actual LiDAR data.

To do this we must find information in our data that we can link together. I.e. find measurements on one frame we also find in other frames. We call these measurements 'features'. Only those measurements that stand out in some way, i.e. are distinct enough to be uniquely identified are useful to us. These features get assigned a descriptor. Descriptors can be used to compare two features and see what their distance is. Note: when we talk about distance here we do not mean their physical distance in the world which is unknown and precisely the problem we are tying to solve. But rather there distance in uniqueness. Two points with a low distance look very similar.

By carefully matching similar features between LiDAR frames we can compute how accurately our frames would fit over each other. This is called the frame error. By finding a transformation, such as translation and rotation that could have happened between the two frames we can reduce the error. Finally if our error is sufficiently close to zero, or we can't find a transformation with a lower error, we are done and can advance to the next frames.

By repeating the above process we build a mental model on how our sensor moved through the scene. Then, we can correct each measurement with our calculated vantage point and we are able to build a digital twin of our scene.

Advantages

- No setup required, no Surveying.

- Labor can continue during scan.

- High data collection rate, we can scan a scene in minutes rather than hours.

- As software advances earlier scans gain accuracy without revisiting the site.

Disadvantages

- Only objects visible (for the human eye) can be scanned.

- Translucent and reflective surfaces/objects can not be scanned (e.g. large bodies of water)